Chrome AP Firmware Embraces the x86_64 Architecture

Executive Summary

This document details the successful implementation of 64-bit boot support (x86_64 architecture) in Chrome AP firmware for booting ChromeOS devices (e.g., Chromebooks and Chromeboxes). The primary motivation for this transition was to overcome the 4GB memory limitation of the traditional 32-bit architecture, which is increasingly insufficient for modern hardware demands.

Key technical challenges included enabling 64-bit mode in various boot phases, including Cache-as-RAM (CAR) mode, and ensuring compatibility with SoC APIs and the payload (libpayload and depthcharge, which boot ChromeOS). A unified entry point for both 32-bit and 64-bit modes was implemented in libpayload, and depthcharge was modified to support 64-bit compilation.

Comparative analysis between 32-bit and 64-bit builds showed an expected increase in SPI flash size by approximately 0.3MB (to support 64-bit architecture), but no significant impact on boot performance. This confirms that the transition to 64-bit architecture is feasible without performance regressions, paving the way for future ChromeOS devices based on Intel SoC platforms.

Objective

This document captures the journey of adding 64-bit boot support to the Chrome AP firmware, which involved adopting the x86_64 architecture.

Background

Traditionally, x86 platforms have relied on the 32-bit architecture, meaning the flat address space is limited to 4GB and accessing memory above 4GB requires remapping (physical-to-virtual memory mapping). The first x86 processor to introduce a 32-bit architecture was the Intel 80386, also known as the i386. It was released in 1985 and marked a significant advancement in the x86 line of processors.

- The i386 could address up to 4GB of physical memory.

- 32-bit registers and a 32-bit data path allowed for faster calculations and manipulation of data.

- Protected Mode, initially introduced in the 80286 processor, extended the addressable memory space significantly. This enabled the implementation of a robust memory management system, facilitating virtual memory and enhancing protection against software crashes.

The primary constraint of the 32-bit architecture is that it can only address a maximum of 4GB of RAM (2^32 bytes). This limitation became increasingly problematic as software and operating systems became more demanding, requiring more memory to function properly. Additionally, processors are becoming more complex, and more advanced IPs (Intellectual Property) such as AI (Artificial Intelligence) accelerators, USB-C controllers, video, and image processing units are expected to consume more system memory to operate. System memory <4GB is already occupied by existing hardware resources (IPs), system software, etc. As a result, SoC programming logic is unable to meet the hardware resource requirements with advanced SoC IPs, which is another reason why the 32-bit CPU architecture is unable to meet the requirements of advanced use cases in 2024.

In comparison, a 64-bit architecture can theoretically address a much larger amount of physical memory (2^52 bytes, or roughly 4096 terabytes) and virtual memory (2^48 bytes, or roughly 256 terabytes). While this is far more than any current system would ever need, it successfully removes the memory limitations imposed by 32-bit systems.

- 4GB maximum addressable RAM in 32-bit vs. virtually unlimited in 64-bit.

- A 64-bit architecture can potentially process data faster due to larger registers and wider data paths.

- Backward compatibility between 32-bit and 64-bit software is also possible.

- Long mode requires paging, and the page tables strengthen security in system software, whereas flat memory access is more prone to attack.
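The address-space figures quoted above follow directly from the address widths. The short Python sketch below reproduces that arithmetic; it is for illustration only, and the helper name `addressable_bytes` is hypothetical.

```python
# Illustrative arithmetic only: address-space sizes for the address widths
# mentioned in the text. `addressable_bytes` is a hypothetical helper name.

GIB = 1 << 30  # bytes in one binary gigabyte
TIB = 1 << 40  # bytes in one binary terabyte


def addressable_bytes(address_bits: int) -> int:
    """Return the number of bytes addressable with the given address width."""
    return 1 << address_bits


flat_32bit = addressable_bytes(32)      # 32-bit flat address space
virtual_64bit = addressable_bytes(48)   # virtual address space quoted above
physical_64bit = addressable_bytes(52)  # physical address limit quoted above

print(flat_32bit // GIB, "GB")
print(virtual_64bit // TIB, "TB")
print(physical_64bit // TIB, "TB")
```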

64-bit architecture for personal computers was first introduced in the early 2000s. The widespread adoption of 64-bit architecture in consumer-level desktops and laptops began in the mid-to-late 2000s with the release of operating systems like Windows Vista 64-bit and the increasing availability of 64-bit processors. System firmware has also moved towards 64-bit booting, even in the client segment, as EDK2-based firmware largely leverages the 64-bit infrastructure changes coming from the server ecosystem.

Overview

Recent developments in Intel SoC architecture, including the integration of discrete tiles and the reorganization of SoC IPs, have increased the need for hardware resources. This is necessary to support improved performance and more efficient communication with reduced latency. For example, a SoC IP/subsystem may require more system memory than traditional ones to map its entire device-specific register space. This space can be easily allocated above 4GB if the underlying architecture supports it. However, for system firmware that cannot access more than 4GB, the SoC must provide a special method that exposes a window onto the same hardware register space below the 4GB boundary. Implementing such a method in SoC designs is costly and necessitates specific security enforcement. Suppose that such special treatment is not feasible in the future SoC roadmap, so that mapping device register space below 4GB is no longer supported. In this case, one possible solution is to enable memory access above 4GB in firmware, allowing the device register space to be mapped without incurring additional SoC costs.

This is not a significant concern for SoC vendors/ODMs/OEMs/IBVs utilizing EDK2-based system firmware (UEFI) because the 64-bit boot mode has been enabled and supported by Independent BIOS vendors (IBVs) for Windows and Linux-based client devices for several years. However, the UEFI implementation of booting x86_64 architecture is limited to specific boot phases. The early phases like Security (SEC) and Pre-EFI Initialization (PEI) are executed in 32-bit mode, and advanced stages like Driver Execution Environment (DXE) and Boot Device Selection (BDS) are solely executed in x86_64 mode.

Unfortunately, the system firmware (coreboot) used in Google ChromeOS devices has only limited support for the x86_64 architecture. Currently, x86_64 is only available on emulators (the QEMU x86_64 board) and a few hardware platforms as an exploratory effort. A few specific 64-bit features have been added under the HAVE_X86_64_SUPPORT Kconfig, including:

- Generating static page tables: top-level entries pointing to Page Directory Pointer Entries (PDPEs), PDPEs pointing to Page Directory Entries (PDEs), and page directories with 512 entries each.

- Loading the Global Descriptor Table (GDT) to access more than 4GB of memory.

- Calling into SoC blobs/APIs in 32-bit mode by following thunking (switching from 64-bit mode to 32-bit mode).

- Transferring control to the payload only in 32-bit mode, where the payload binary should be loaded into less than 4GB of memory. During the jump into the payload, coreboot will transition from long mode to protected mode.

The rationale behind supporting x86_64 boot mode for future Intel SoC platforms is to ensure that hardware resources can be accessed without limitations. Therefore, the goal of AP Firmware used across ChromeOS devices is more ambitious than the current offerings of coreboot regarding x86_64 support. The following is a list of objectives that Chrome AP Firmware aims to achieve when claiming that x86_64 architecture support is production-ready:

- Ability to switch to 64-bit while operating in Cache-as-RAM (CAR) mode, including validating CAR mode operation in long mode and enabling paging with a large 1GB page table.

- Support for SoC blobs/APIs in 64-bit mode, particularly the ability to call into Firmware Support Package (FSP) API entry points without switching between protected mode and long mode upon exit, thereby reducing latency.

- Exception handling should adhere to the x86_64 architecture specification across all the different stages of the firmware boot (such as the boot firmware and/or the payload).

- Switch to the payload in long mode without thunking, leveraging libpayload in long mode instead of the traditional approach where coreboot always switches into the payload in protected mode. Design the coreboot to libpayload entry point in a scalable manner to allow more flexibility when switching between coreboot and payload. Validate all below modes of switching between coreboot and libpayload:

- Support traditional 32-bit mode of switching.

- Allow thunking from coreboot running in long mode but jumping into the payload in protected mode and eventually transitioning into long mode inside the payload.

- Support x86S mode of booting as well (for future SoC/FW readiness).

- Migrate all debug interfaces, such as the GDB (GNU debugger), firmware shell (pre-boot CLI environment in Chrome AP firmware) etc. to support 64-bit mode of operations.

- Conduct platform validation, Functional Automated Firmware Test (FAFT), and Targeted Acceptance Support Test (TAST) to ensure that x86_64 is as stable as traditional x86_32 mode of booting.

Detailed Implementation

This section highlights the changes made across the various boot phases, both within coreboot and the payload. Currently, the majority of x86 platforms hosted in the coreboot project support the 32-bit architecture. Previously, there were limited experimental efforts to add 64-bit architecture support to the coreboot tree. This proof-of-concept work (performed using a Chromebook platform) extends those efforts to ensure that the x86_64 architecture support in coreboot is stable and well-tested.

Responsibility of x86_64 Kconfigs

In order to maintain support for the x86_64 (64-bit) platform alongside the current ecosystem, USE_X86_64_SUPPORT and HAVE_X86_64_SUPPORT Kconfigs are employed. The purpose of this document and the proof-of-concept work is to guarantee the x86_64 boot mode using the Intel Meteor Lake platform, even though supporting x86_64 boot using the coreboot tree is still in the experimental stage. This is done to prepare the Chrome AP firmware stack for future SoC generations from Intel.

To start coreboot in 64-bit mode, the ARCH_ALL_STAGES_X86_64 Kconfig option must be selected. This allows coreboot to run in long (64-bit) mode. All coreboot stages are compiled using a 32-bit toolchain by default; selecting this option switches all stages to a 64-bit toolchain.

The Kconfig option USE_X86_64_SUPPORT becomes enabled when HAVE_X86_64_SUPPORT is selected. This selection ensures that all boot phases, including bootblock, verstage (if enabled), romstage, and ramstage, are compiled in long mode.

A 64-bit build of each coreboot boot phase picks the CFLAGS and linker scripts required for the x86_64 architecture to generate executable binaries in “elf64-x86-64” format.

Moreover, x86_64-specific Kconfig options ensure that the low-level assembly files designed for long-mode operation are chosen over those for the native 32-bit implementation. For instance, the low-level operations memcpy, memset, and memmove differ between the 32-bit and 64-bit architectures; hence, to implement the memory move operation in long mode, memmove_64.S is picked over memmove.S.

x86_64 support in Cache-As-RAM

During the power-on reset process of an x86 system, the x86-CPU begins in real mode (16-bit) and eventually transitions to protected mode (32-bit). To enable support for x86_64 long mode, it is crucial to transition the CPU from protected mode to long mode as soon as possible. There is a specific sequence of steps that must be followed to successfully perform this transition.

Enable PAE (Physical Address Extension):

Set the PAE bit in the CR4 control register. This enables the extended (64-bit) page table entry format and expands the available physical address space; it is a prerequisite for Long Mode.

Enable Long Mode in EFER:

Set the LME (Long Mode Enable) bit in the EFER (Extended Feature Enable Register), signaling the processor that Long Mode is desired.

Load a Valid PML4 Table:

Load the CR3 register with the physical address of the PML4 (Page Map Level 4) table. This is the root of the page table hierarchy for Long Mode.

Enable Paging:

Set the PG (Paging) bit in the CR0 control register, enabling paging. This is a requirement for Long Mode.

Far Jump to Code Segment:

Perform a far jump instruction to a code segment with a Long Mode compatible descriptor. This jump completes the transition into Long Mode.
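The ordering constraints in the steps above can be sketched with a small software model. This is a Python illustration only, not firmware code: the `Cpu` class and `enter_long_mode` function are hypothetical names, while the bit positions (CR4.PAE bit 5, EFER.LME bit 8, EFER.LMA bit 10, CR0.PG bit 31) follow the x86-64 architecture.

```python
# Illustrative model only, not firmware code. `Cpu` is a hypothetical stand-in
# for the real control registers and the EFER MSR.

CR0_PE = 1 << 0     # CR0: Protection Enable
CR0_PG = 1 << 31    # CR0: Paging
CR4_PAE = 1 << 5    # CR4: Physical Address Extension
EFER_LME = 1 << 8   # EFER: Long Mode Enable (set by software)
EFER_LMA = 1 << 10  # EFER: Long Mode Active (set by hardware)


class Cpu:
    def __init__(self):
        self.cr0 = CR0_PE         # protected mode, paging off
        self.cr3 = None           # no page table root loaded yet
        self.cr4 = 0
        self.efer = 0
        self.cs_is_64bit = False  # True once CS holds a 64-bit descriptor

    def enable_paging(self):
        if self.efer & EFER_LME:
            # Activating long mode requires PAE and a loaded PML4 address.
            if not self.cr4 & CR4_PAE:
                raise RuntimeError("CR4.PAE must be set before CR0.PG")
            if self.cr3 is None:
                raise RuntimeError("CR3 must hold the PML4 address")
            self.efer |= EFER_LMA
        self.cr0 |= CR0_PG

    def far_jump(self, to_64bit_code_segment):
        self.cs_is_64bit = to_64bit_code_segment

    def mode(self):
        if not self.efer & EFER_LMA:
            return "protected"
        return "64-bit" if self.cs_is_64bit else "compatibility"


def enter_long_mode(cpu, pml4_address):
    if pml4_address % 4096:
        raise ValueError("PML4 table must be 4KB aligned")
    cpu.cr4 |= CR4_PAE      # 1. enable PAE
    cpu.efer |= EFER_LME    # 2. request long mode
    cpu.cr3 = pml4_address  # 3. load the page table root
    cpu.enable_paging()     # 4. set CR0.PG; hardware then sets EFER.LMA
    cpu.far_jump(True)      # 5. far jump to a 64-bit code segment


cpu = Cpu()
enter_long_mode(cpu, 0x1000)
print(cpu.mode())
```

The model makes one point explicit: between enabling paging and the far jump, the CPU is in compatibility mode, which is why the final far jump is needed to complete the transition.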

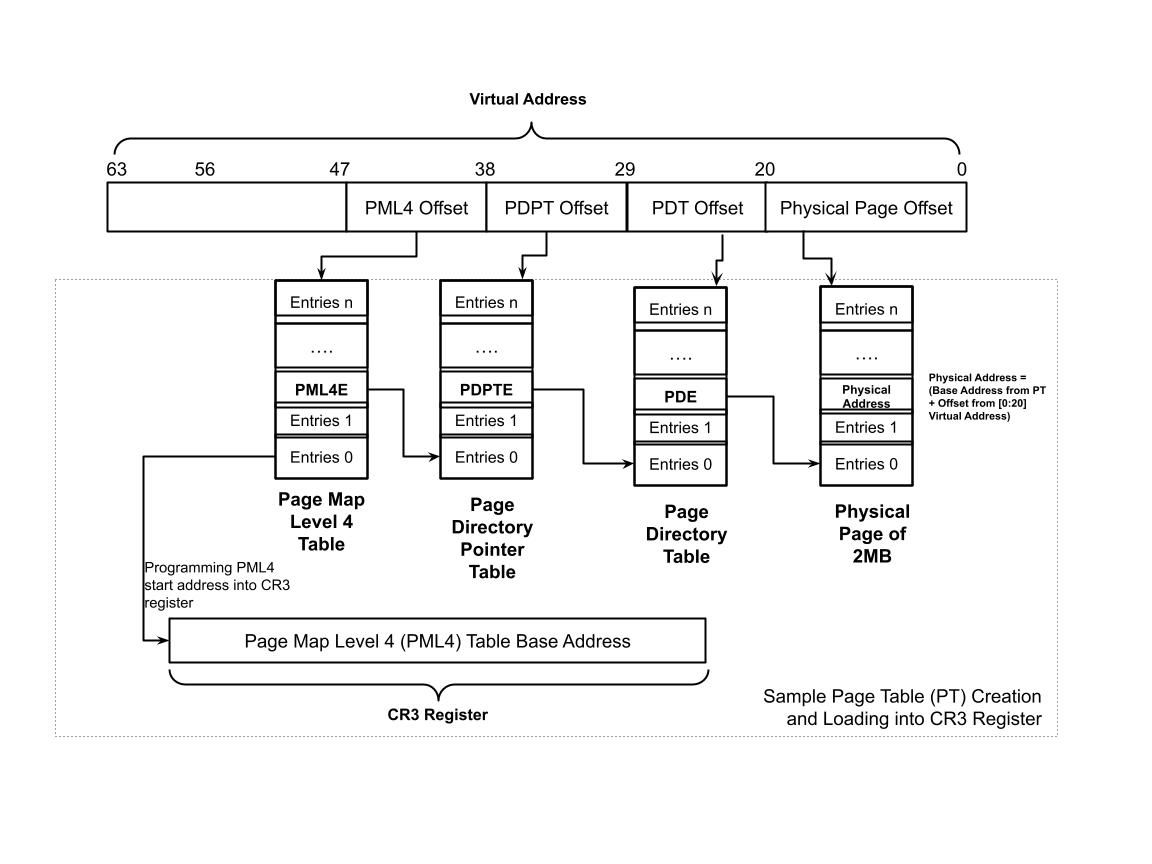

In x86-64 long mode, page tables are vital for translating virtual addresses to physical addresses. They remain crucial for memory management even in the absence of physical memory (i.e., in CAR mode). A CPU core seeking data from a virtual address initially checks the TLB (Translation Lookaside Buffer), a cache of recent page table entries. If the entry is found, translation is rapid. However, a TLB miss triggers a traversal of the multi-level page table hierarchy, starting from the PML4 (Page Map Level 4) table and proceeding down to the entry that holds the physical address. In the cache-as-RAM scenario, this page table walk retrieves the necessary entries from the cache, emulating regular RAM behavior. The IA common code that manages CAR setup is also responsible for performing the long mode transition and setting up the page table.

Early boot phase is also responsible for loading the Global Descriptor Table (GDT) in 64-bit mode that supports >4GB address accesses and exception handling.

Page Table (PT) in coreboot

In the 64-bit architecture, the page table translates virtual memory accesses to physical memory and additionally provides security. Each entry in the page table maps a virtual page to a physical frame, storing additional information like access permissions and caching attributes.

coreboot supports two different types of page table creation logic as below.

- Supporting Large (1GB) Paging: Each page entry in the page table maps a 1GB chunk of virtual memory to a contiguous 1GB region of physical memory. In x86-64, 1GB pages bypass the Page Directory Table (PDT) level of the page table hierarchy, mapping directly from the Page Directory Pointer Table (PDPT) to the physical page.

- Supporting Small (2MB) Paging: Each page entry maps a 2MB chunk of virtual memory to a contiguous 2MB region of physical memory. It uses three levels of the x86-64 page table hierarchy: Page Map Level 4 (PML4), Page Directory Pointer Table (PDPT), and Page Directory Table (PDT), bypassing only the final Page Table (PT) level.
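To make the two layouts concrete, the following Python sketch splits a 48-bit virtual address into its table indices for each page size. It is an illustration of the architectural bit layout (9 index bits per level), not coreboot code; `split_virtual_address` is a hypothetical helper.

```python
# Illustrative only: decompose a virtual address into page table indices.
# `split_virtual_address` is a hypothetical helper, not a coreboot function.

PAGE_2M = 1 << 21
PAGE_1G = 1 << 30
INDEX_MASK = 0x1FF  # each table has 512 entries, i.e. 9 index bits


def split_virtual_address(vaddr: int, page_size: int) -> dict:
    fields = {
        "pml4": (vaddr >> 39) & INDEX_MASK,  # bits 47:39
        "pdpt": (vaddr >> 30) & INDEX_MASK,  # bits 38:30
    }
    if page_size == PAGE_1G:
        # The PDPT entry maps the page directly; bits 29:0 are the offset.
        fields["offset"] = vaddr & (PAGE_1G - 1)
    elif page_size == PAGE_2M:
        # One more level: the PDT entry maps the page; bits 20:0 are the offset.
        fields["pdt"] = (vaddr >> 21) & INDEX_MASK  # bits 29:21
        fields["offset"] = vaddr & (PAGE_2M - 1)
    else:
        raise ValueError("only 2MB and 1GB pages are modelled here")
    return fields


address = 0x1_4020_1234  # an address just above 5GB
large = split_virtual_address(address, PAGE_1G)
small = split_virtual_address(address, PAGE_2M)
print(large)
print(small)
```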

Figure 1.0 shows the virtual to physical memory mapping in a long mode operation.

In the above example, coreboot creates a static Page Table (PT), as physical memory is not yet available, and programs the address of the Page Map Level 4 (PML4) table into the CR3 register during long mode entry.

1GB pages can result in more efficient TLB usage because fewer entries are required to cover the same amount of virtual address space. This can lead to faster translations and reduced overhead. If CPUID leaf 0x80000001, EDX bit 26 is set to 1, 1GB pages are supported. A recent change in coreboot (!NEED_SMALL_2MB_PAGE_TABLES) was made so that the default page table creation uses the larger (1GB) pages.
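The CPUID check described above amounts to a single bit test. A minimal Python sketch follows, assuming the EDX value returned by CPUID leaf 0x80000001 has already been obtained and is passed in as a plain integer; the function names are hypothetical.

```python
# Illustrative only: decide the page size from the EDX value returned by
# CPUID leaf 0x80000001. Function names are hypothetical.

CPUID_EXTENDED_FEATURES_LEAF = 0x80000001
EDX_PAGE_1GB = 1 << 26  # EDX bit 26: 1GB pages supported

PAGE_2M = 1 << 21
PAGE_1G = 1 << 30


def supports_1gb_pages(edx: int) -> bool:
    return bool(edx & EDX_PAGE_1GB)


def choose_page_size(edx: int) -> int:
    """Prefer 1GB pages when the CPU reports support, else fall back to 2MB."""
    return PAGE_1G if supports_1gb_pages(edx) else PAGE_2M


print(choose_page_size(EDX_PAGE_1GB) == PAGE_1G)
print(choose_page_size(0) == PAGE_2M)
```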

Calling into SoC APIs (blobs) in long mode

On x86 platforms, SoC programming is performed by proprietary blobs provided by the silicon vendor. In this blob model, SoC programming starts when coreboot calls into the respective blob entry point. The integration between coreboot and the SoC binaries follows an API (Application Programming Interface) model. Historically, the communication between coreboot and the SoC binary (aka FSP) APIs has followed the de facto standard of 32-bit calling conventions.

For example, the table below shows the code snippet for calling into Intel FSP-S (Silicon Init API). The default behavior is to call FSP APIs in protected mode (if the PLATFORM_USES_FSP2_X86_32 configuration is set), even if coreboot is compiled in 64-bit mode. The primary reason for this behavior is that earlier FSP specifications and blobs do not support native 64-bit operation.

The FSP 2.4 specification for Intel next-generation processors (post Meteor Lake) supports transferring control between coreboot and FSP in long mode. This proof-of-concept work uses the Intel Meteor Lake based reference design “Rex64”, adapted to the FSP 2.4 specification and 64-bit FSP blobs, to call into FSP APIs directly in long mode w/o thunking into 32-bit mode.

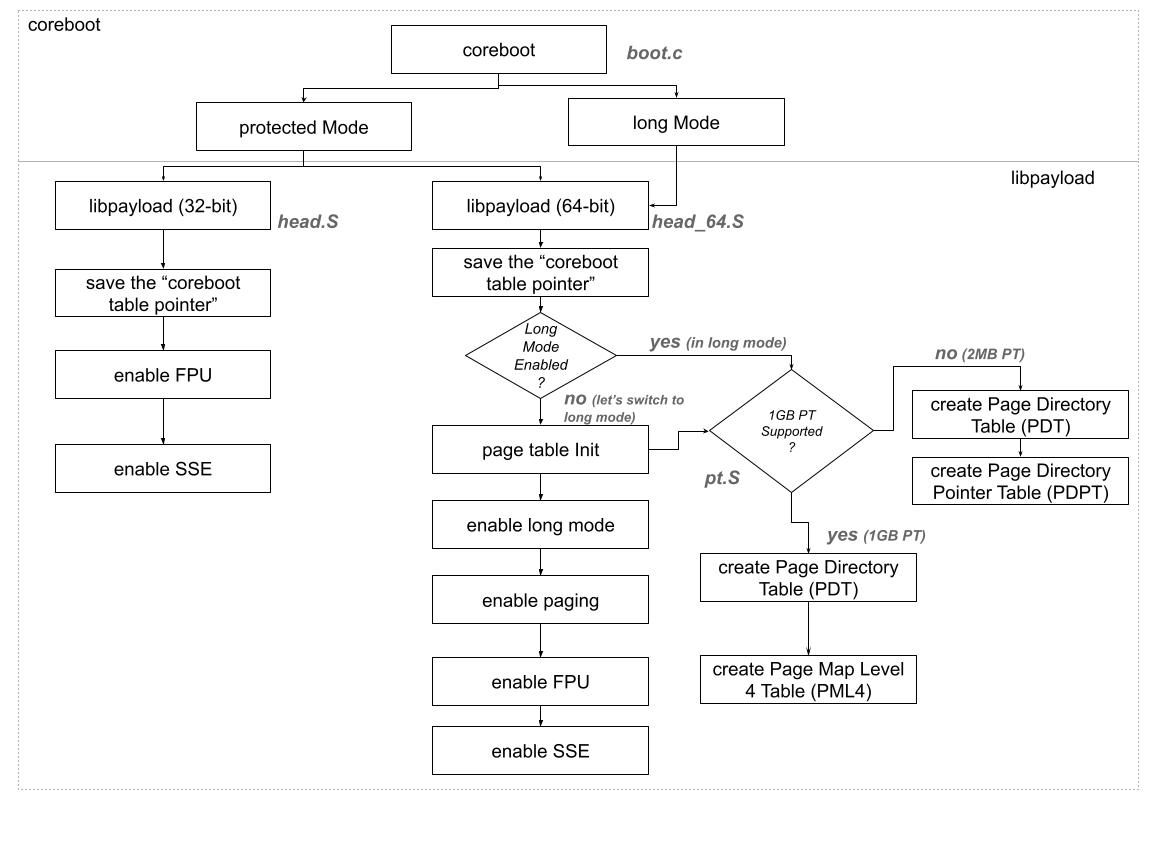

Transferring Control from coreboot to libpayload

The execution of each stage of coreboot is handled by arch_prog_run implementation found in the boot.c file. The transition to the next stage's entry point is determined by the operating mode of the current stage. For instance, programs running in 64-bit mode enter the next stage's entry point in long mode.

When coreboot decides to pass control to the payload, the above mentioned logic does not apply. The libpayload, a crucial layer in the boot process, bridges the communication gap between the boot firmware (coreboot) and the payload responsible for booting a specific operating system.

Libpayload plays a fundamental role during the boot process to the ChromeOS-specific payload (depthcharge) by making platform-centric information accessible to the payload. The control transfer between coreboot (ramstage) and libpayload has always operated in protected mode. As a result, libpayload's entry point implementation only supports 32-bit operation. Consequently, payload memory access and operations are limited to 32-bit addressing, restricting access to resources beyond 4GB.

In the next section, we will take a detailed look at the modifications made to the entry point of libpayload. This POC work (w/ the code change below) allows coreboot to seamlessly switch between different modes (long and protected mode) based on the type of payload. This flexibility is important for validating different scenarios, both current and future (especially with the introduction of X86S).

Libpayload: Unified Entry Point for x86_32 and x86_64 mode

We have added x86_64 architecture specific CFLAGS, linker scripts, toolchains etc. into the libpayload build system, similar to the approach followed previously in coreboot to add support for x86_32 architecture.

Along with other key changes made by CB:81968, the primary item in the current implementation is refactoring the existing libpayload implementation for the x86 architecture to keep both 32-bit and 64-bit support in parallel. Hence, ARCH_X86_32 and ARCH_X86_64 Kconfigs were added under the main ARCH_X86 architecture Kconfig. This keeps the required architecture-specific changes independent of each other.

As discussed in the previous section, the major limitation of the existing libpayload implementation was that it only supported protected mode operation. Hence, the key feature added by this work is a unified entry point implementation for 32-bit and 64-bit modes of operation. This new implementation allows coreboot to jump directly into the payload in long mode without thunking.

The heart of the implementation revolves around low level assembly changes as below:

1. head.S/head_64.S

Depending on the underlying architecture, the platform selects either the ARCH_X86_32 or the ARCH_X86_64 Kconfig while building libpayload. For example, head.S is compiled when ARCH_X86_32 is selected, and head_64.S when libpayload is built in 64-bit mode (w/ ARCH_X86_64).

The primary role of head.S is to fill the “cb_header_ptr” variable, a pointer that holds the address of the coreboot table. Because the function calling convention differs between protected mode and long mode, the way head.S fills “cb_header_ptr” also differs.

Below table explains which libpayload entry point implementation to call into depending on the nature of operation.

Let's follow the difference in operation between those two specific implementations of libpayload entry point assembly file.

Table: Comparison of libpayload entry point across different ARCH_X86_?? architectures

2. pt.S

In long mode, libpayload/payload operation requires page table creation. There are two main reasons why the libpayload entry point should perform this step during the coreboot transition to libpayload:

- Libpayload is built with ARCH_X86_64 Kconfig, while coreboot jumps into the payload entry point in protected mode. To switch back to long mode, libpayload must load the page table appropriate for the CPU architecture that supports large or small page tables.

- coreboot jumps into libpayload in X86S mode, and the goal is to enable paging up to 512GB of range using a 1GB page table. This is crucial to avoid on-demand paging while depthcharge attempts to wipe-off or access memory >4GB in developer mode. Without this implementation, depthcharge would need to implement on-demand paging between virtual and physical memory when accessing memory >4GB. This could introduce latency and require redundant page table programming within depthcharge, which is something we want to avoid.

The page table creation in libpayload follows a similar implementation to coreboot's. However, coreboot's page table creation relies on static page table entries, resulting in a larger binary size and a potential security risk. The benefit of using a static page table in coreboot is that it requires minimal assembly programming. More importantly, coreboot cannot have a single, link-time known location for a page table that is written into memory and remains valid throughout the entire execution (due to CAR teardown). In libpayload we do not have this issue, because DRAM is available and there are no further stage transitions (until we hand off to the kernel). One more limitation of coreboot's page table creation is that it maintains two separate implementations for small (2MB) and large (1GB) pages, which increases the code maintenance burden.

While implementing the page table in libpayload, we considered several factors:

- coreboot can jump into the libpayload entry point in either protected or long mode, depending on the mode of operation, and we must still be able to create a page table compatible with the operating mode.

- Need to keep only one implementation that can dynamically support either 2MB or 1GB page table creation looking at the CPUID 0x80000001/EDX bit 26.

- The page table initialization function `init_page_table` is designed to function in both 32-bit protected mode and 64-bit long mode.

- The page table implementation will only utilize assembly instructions that have the same binary representation in both 32-bit and 64-bit modes.

- We assemble with `.code64` to ensure the assembler uses the correct 64-bit version of instructions (e.g., `inc`).

- Additionally, we carefully utilize the registers:

- use 64-bit register names (like `%rsi`) for register-indirect addressing to avoid incorrect address size prefixes.

- It is safe to use `%esi` with `mov` instructions, as the high 32 bits are zeroed in 64-bit mode.
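The single dynamic implementation described above can be modelled at a high level as follows. This Python sketch is not the assembly in pt.S: `build_identity_map` and the base address are hypothetical, and the tables are represented as plain lists rather than memory. It shows how one routine can produce a 512GB identity map with either 1GB or 2MB pages, depending on the CPUID result.

```python
# Illustrative model only: build a 512GB identity map using either 1GB or
# 2MB pages. `build_identity_map` is a hypothetical name, not the pt.S code.

PTE_PRESENT = 1 << 0
PTE_WRITABLE = 1 << 1
PTE_PAGE_SIZE = 1 << 7  # marks a PDPT/PDT entry as mapping a large page
ENTRIES = 512           # entries per table
TABLE_BYTES = 4096      # each table occupies one 4KB page


def build_identity_map(base: int, has_1gb_pages: bool) -> dict:
    """Return {table address: list of 512 entries} covering 0..512GB."""
    link = PTE_PRESENT | PTE_WRITABLE
    leaf = link | PTE_PAGE_SIZE
    pml4 = base
    pdpt = base + TABLE_BYTES
    tables = {pml4: [pdpt | link] + [0] * (ENTRIES - 1), pdpt: [0] * ENTRIES}
    for i in range(ENTRIES):
        if has_1gb_pages:
            # Each PDPT entry maps one 1GB page directly.
            tables[pdpt][i] = (i << 30) | leaf
        else:
            # Each PDPT entry points to a PDT holding 512 x 2MB pages.
            pdt = base + (2 + i) * TABLE_BYTES
            tables[pdpt][i] = pdt | link
            tables[pdt] = [((i << 30) + (j << 21)) | leaf for j in range(ENTRIES)]
    return tables


large = build_identity_map(0x100000, has_1gb_pages=True)
small = build_identity_map(0x100000, has_1gb_pages=False)
print(len(large) * TABLE_BYTES, "bytes of tables with 1GB pages")
print(len(small) * TABLE_BYTES, "bytes of tables with 2MB pages")
```

In this model the 1GB variant needs only two tables (8KB), while the 2MB variant needs 514 tables (a little over 2MB), which illustrates why the larger page size is preferred when the CPU supports it.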

3. exception_asm_64.S

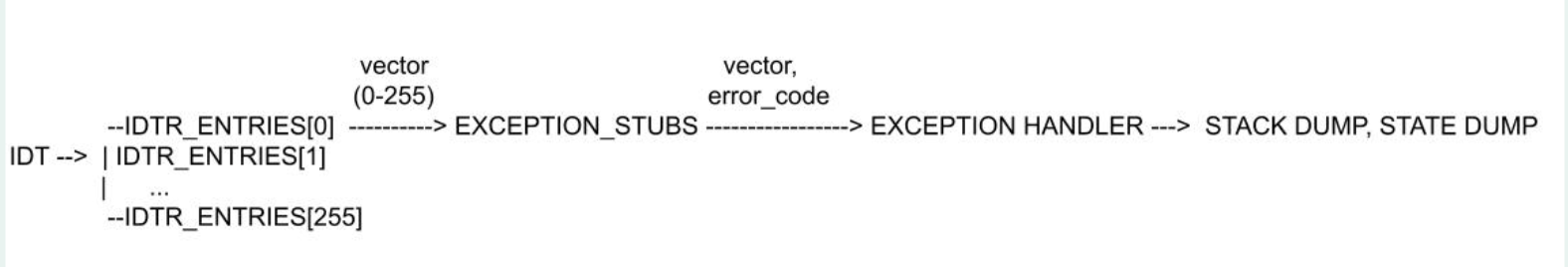

Exception handling is the process of responding to unexpected events, such as hardware errors or invalid instructions, that disrupt the normal flow of a program. Examples include divide by zero, page fault, machine check exception, and invalid opcode. Refer to payloads/libpayload/include/x86/arch/exception.h for more details about exception types. The Interrupt Descriptor Table (IDT) plays a critical role in this process. The IDT is a data structure that stores the memory addresses of the handlers for the different types of exceptions and interrupts. The IDT is indexed by the vector number of the exception, which is a value between 0 and 255. The IDT entry for a given vector contains the memory address of the corresponding exception handler, as well as the code segment selector and the gate type and attribute bits.

When an exception or interrupt occurs, the processor consults the IDT to locate the appropriate handler, which is a piece of code designed to address the specific event. This handler then takes necessary actions, such as logging the error, stack dump, hooking the GDB (GNU Debugger) or gracefully terminating the program.

There are basic differences between the size of the Interrupt Descriptor Table for 32-bit and 64-bit. On 32-bit processors, the entries in the IDT are 8 bytes long and form a table like this:

On 64-bit processors, the entries in the IDT are 16 bytes long and form a table like this:

When filling the interrupt table entries for a 64-bit system, we must consider three offsets: offset_0 (bits 0-15), offset_1 (bits 16-31), and offset_2 (bits 32-63). In a 32-bit IDT entry, however, there is no offset_2 field to account for.
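The size difference and the split of the handler address can be illustrated by packing one gate descriptor of each kind. The Python sketch below uses the architectural gate layouts; the function names are hypothetical, and the `0x8E` attribute byte (present, DPL 0, interrupt gate) and selector `0x08` are example values chosen for illustration, not values taken from libpayload.

```python
# Illustrative only: pack one IDT gate descriptor for each architecture.
# Function names, the selector, and the attribute byte are example choices.
import struct


def pack_gate32(handler: int, selector: int, type_attr: int = 0x8E) -> bytes:
    """8-byte gate: offset[15:0], selector, zero, type/attr, offset[31:16]."""
    return struct.pack(
        "<HHBBH",
        handler & 0xFFFF,
        selector,
        0,
        type_attr,
        (handler >> 16) & 0xFFFF,
    )


def pack_gate64(handler: int, selector: int, type_attr: int = 0x8E, ist: int = 0) -> bytes:
    """16-byte gate: offset_0, selector, IST, type/attr, offset_1, offset_2, reserved."""
    return struct.pack(
        "<HHBBHII",
        handler & 0xFFFF,              # offset_0: bits 0-15
        selector,
        ist,
        type_attr,
        (handler >> 16) & 0xFFFF,      # offset_1: bits 16-31
        (handler >> 32) & 0xFFFFFFFF,  # offset_2: bits 32-63
        0,                             # reserved
    )


gate32 = pack_gate32(0x12345678, selector=0x08)
gate64 = pack_gate64(0x0000000123456789, selector=0x08)
print(len(gate32), len(gate64))
```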

Depthcharge: Supporting x86_64 mode

Similar to libpayload, the depthcharge code changes also introduce ARCH_X86_64 (for 64-bit) and ARCH_X86_32 (for 32-bit) to keep two supported architectures in parallel as part of the payload support. Files necessary for 64-bit compilation are now guarded by the `CONFIG_ARCH_X86_64` Kconfig option.

Besides adding the 64-bit architecture specific Kconfig and allowing 64-bit implementations (.c and .S files) to be compiled for the x86_64 architecture, this patch also modifies compiler flags to meet the stack boundary alignment requirements of the 64-bit architecture. For example:

- -mpreferred-stack-boundary=2 --> Aligns to 4-byte boundary (2^2 = 4) for x86_32 (32-bit)

- -mpreferred-stack-boundary=4 --> Aligns to 16-byte boundary (2^4 = 16) for x86_64 (64-bit)

Similarly, we encountered an interesting problem with the “firmware-shell” operating in long mode: executing any built-in command inside the firmware-shell resulted in an exception. After debugging, we concluded that the linker symbols of the firmware-shell pre-built commands were not aligned for the underlying architecture. For example, when compiling the firmware-shell in x86_64 mode, the variables should be aligned to 8 or 16 bytes, whereas the alignment requirement for 32-bit mode is 4 bytes.

Finally, libpayload implements the arch_phys_map() function, which maps virtual memory to physical memory for 64-bit in a more sophisticated way than the 32-bit implementation of arch_phys_map(). The 32-bit implementation maps virtual addresses to physical addresses on demand and optionally invalidates any old mapping.
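The alignment arithmetic behind both the stack-boundary flags and the linker-symbol issue can be sketched in a few lines of Python. This is an illustration only; `stack_alignment` and `align_up` are hypothetical helpers, and the address `0x1004` is an arbitrary example rather than a value from the firmware-shell.

```python
# Illustrative only: alignment arithmetic for the cases described above.
# Helper names and the example address are hypothetical.


def stack_alignment(preferred_stack_boundary: int) -> int:
    """-mpreferred-stack-boundary=N requests 2**N byte stack alignment."""
    return 1 << preferred_stack_boundary


def align_up(address: int, alignment: int) -> int:
    """Round address up to the next multiple of a power-of-two alignment."""
    return (address + alignment - 1) & ~(alignment - 1)


print(stack_alignment(2), stack_alignment(4))

# A symbol that is fine at a 4-byte boundary may be misplaced for 64-bit code.
example_address = 0x1004
print(hex(align_up(example_address, 4)))  # already 4-byte aligned
print(hex(align_up(example_address, 8)))  # must move to reach 8-byte alignment
```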

Transferring Control from Depthcharge to ChromeOS

Modern Linux kernels offer two different entry points that a bootloader can jump to: the legacy protected mode entry point and the modern long mode entry point. Traditionally, the payload designed for CrOS jumps into the kernel in protected mode. The only argument that it passes to the kernel entry point is “boot_params”. The table below provides the code snippet executed by the kernel when it is entered at the protected mode entry point.

The newer implementation transfers control to the kernel entry point in long mode (which is 512 bytes past the kernel legacy entry point). It relies on the kernel header data structure (hdr, the kernel setup header) that contains information about the kernel being loaded.

- xloadflags: A field within the hdr structure holding flags that describe the kernel's properties.

- XLF_KERNEL_64: A constant representing a flag indicating that the kernel is designed for 64-bit execution.

Eventually, the bootloader transfers control to the kernel in long mode if the kernel is 64-bit bootable, i.e., if the XLF_KERNEL_64 flag is set within the xloadflags field.
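The decision described above can be sketched as follows. This Python model is not depthcharge code: `select_kernel_entry` is a hypothetical name and the load address is an example. The field offset (0x236 for xloadflags), the flag value (bit 0 for XLF_KERNEL_64), and the 0x200 (512-byte) entry offset follow the Linux x86 boot protocol.

```python
# Illustrative only: choose the kernel entry point from the xloadflags field.
# Function names and the load address are hypothetical example choices.
import struct

XLOADFLAGS_OFFSET = 0x236  # offset of xloadflags within the kernel header
XLF_KERNEL_64 = 1 << 0     # kernel has a 64-bit entry point
ENTRY_64_OFFSET = 0x200    # 64-bit entry sits 512 bytes past the legacy entry


def kernel_is_64bit_bootable(header: bytes) -> bool:
    (xloadflags,) = struct.unpack_from("<H", header, XLOADFLAGS_OFFSET)
    return bool(xloadflags & XLF_KERNEL_64)


def select_kernel_entry(load_address: int, header: bytes):
    """Return (cpu mode to jump in, entry address)."""
    if kernel_is_64bit_bootable(header):
        return ("long", load_address + ENTRY_64_OFFSET)
    return ("protected", load_address)


# Build two minimal fake headers: one with XLF_KERNEL_64 set, one without.
header_64 = bytearray(0x240)
struct.pack_into("<H", header_64, XLOADFLAGS_OFFSET, XLF_KERNEL_64)
header_32 = bytes(0x240)

print(select_kernel_entry(0x100000, bytes(header_64)))
print(select_kernel_entry(0x100000, header_32))
```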

Comparative Analysis

This POC work helps to establish the fundamental building block of x86_64 mode for Chrome AP firmware, which can possibly be used by the Intel next-generation SoC platform (aka Panther Lake). It is therefore important not only to add the foundational 64-bit code to create 64-bit binaries and boot cleanly to the OS in x86_64 boot mode, but also to capture a comparative analysis between a platform in 32-bit mode and the same platform using the 64-bit boot recipe.

We are able to create a 64-bit build for Rex (the reference platform based on Intel Meteor Lake generation) known as Rex64. We have completed the end-to-end measurement related to boot time and SPI size increase between Rex and Rex64 build.

Table: SPI Size Impact between Meteor Lake (32-bit) and Panther Lake (w/ N-1 aka Meteor Lake) due to 64-bit migration

Based on the above table, we are expecting to see ~0.5MB growth in the SPI flash due to migrating to x86_64 mode.

Unfortunately, we do not see any savings in the FSP and/or overall coreboot boot numbers w/ this planned toolchain migration. At the same time, the boot numbers are on par with the 32-bit boot numbers (aka no regression).

Table: Boot Impact between 32-bit AP firmware and 64-bit AP firmware

Summary

The document outlines the process of updating the coreboot, FSP, libpayload and depthcharge codebases to support x86_64 (64-bit) mode in ChromeOS.

- Successfully enabled complete x86_64 boot flow using real hardware (Rex64).

- Highlighted the total changes across different boot phases to support x86_64 mode.

- It addresses the need for large page tables (1GB) to avoid on-demand paging and enable efficient memory wiping during developer mode.

- The page table creation in libpayload is designed to support both 32-bit protected mode and 64-bit long mode, using assembly instructions with the same binary representation in both modes.

- Depthcharge also introduces support for x86_64 and compiles 64-bit implementations for the x86_64 architecture.

- The document highlights the challenges faced in ensuring proper stack boundary alignment and handling firmware-shell commands in long mode.

- It concludes with a discussion on transferring control from depthcharge to ChromeOS, emphasizing the use of kernel header data structures to facilitate this transition.